NVIDIA’s GPU Technology Conference (GTC, held May 8-12, 2017) is this editor’s favorite conference and trade show, and we have attended 7 GTCs since NVISION ’08. The GTC is an important trade conference that is attended by every major automaker, most major labs, and every major technology company.

Although a single-day pass to this 5-day conference costs $660, it gets more crowded with each successive year. About 7,000 people attended this event, up 1,500 over last year’s GTC, and over 20,000 attended other GTC events worldwide last year. Yet no emphasis was placed on the GPU for gaming, which is half of NVIDIA’s business. No consumer media were invited, as this GTC was simply not about GeForce. However, GTC 2017 was absolutely fascinating from this gamer’s perspective because of the release of a new architecture with Volta GV100, and perhaps we can even speculate somewhat about NVIDIA’s future GeForce roadmap by analyzing their recent pattern of GPU architecture releases and the timing of GeForce video card releases.

The GTC is all about the GPU and harnessing its massive parallel power to significantly improve the way humans compute and play games. This year, the emphasis continues to be on AI and on deep learning. A new Volta architecture was announced by NVIDIA’s CEO Jensen Huang during last Wednesday’s keynote, featuring the GV100 Tesla for high performance computing (HPC), and gamers want to know if Volta GeForce gaming GPUs and video cards are far behind. We have to say, perhaps not, if Pascal’s GP100 launch at last year’s GTC is any indication.

First, let’s take a look at GTC 2017, where additional emphasis has been placed on serving the scientific community because of the explosion in research and development focused on artificial intelligence (AI). AI relies on deep learning, which means that machines must be able to teach themselves by analyzing massive data sets and must be able to draw accurate conclusions from them. These massive data sets require faster and faster GPUs to process them quickly. These GPUs are co-processors alongside the CPU, and they are basically the same whether used for AI or for playing PC games.

Although Pascal’s GP100 was released at last year’s GTC 2016 and the Pascal architecture is only one year old, we now have the Volta architecture and GV100 released at GTC 2017! We already knew that Summit, a supercomputer being developed by IBM for Oak Ridge National Laboratory to replace Titan, will be powered by IBM’s POWER9 CPUs and NVIDIA Volta GPUs and is to be finished in 2017. However, it is pretty exciting to realize that GV100 will actually be available to buy next quarter for HPC.

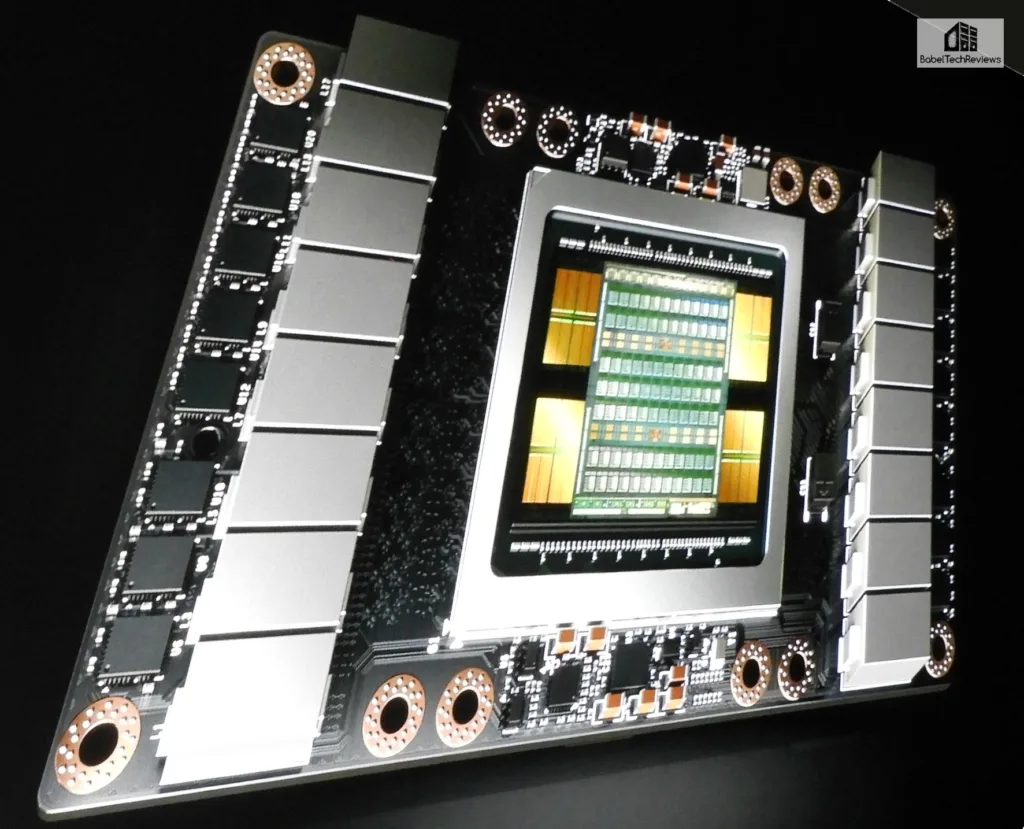

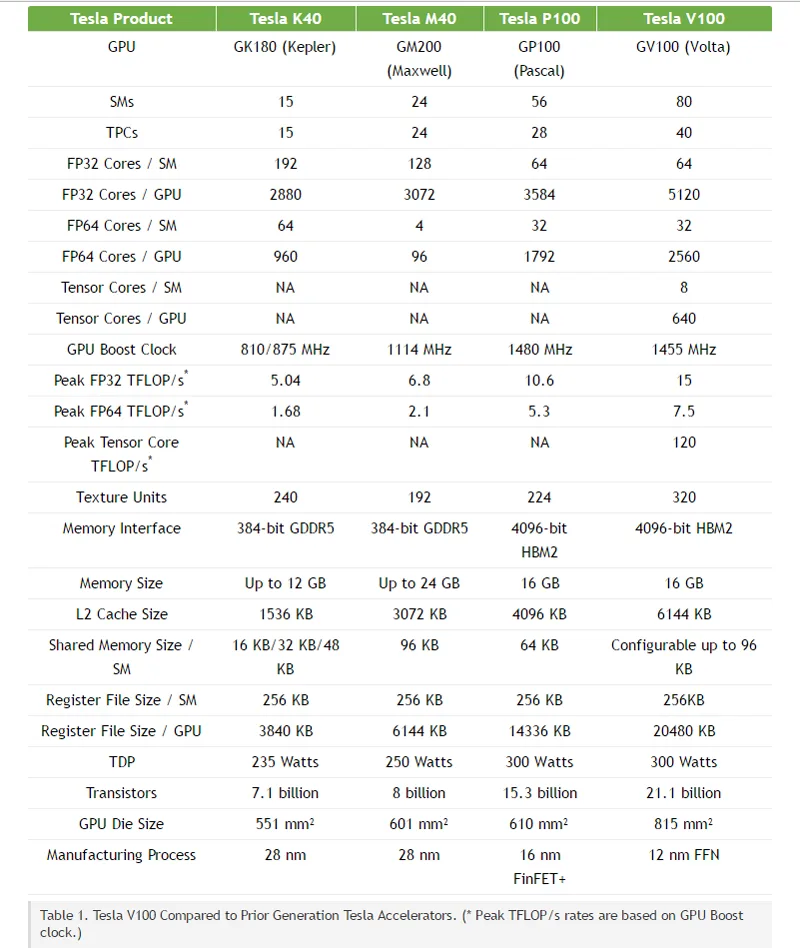

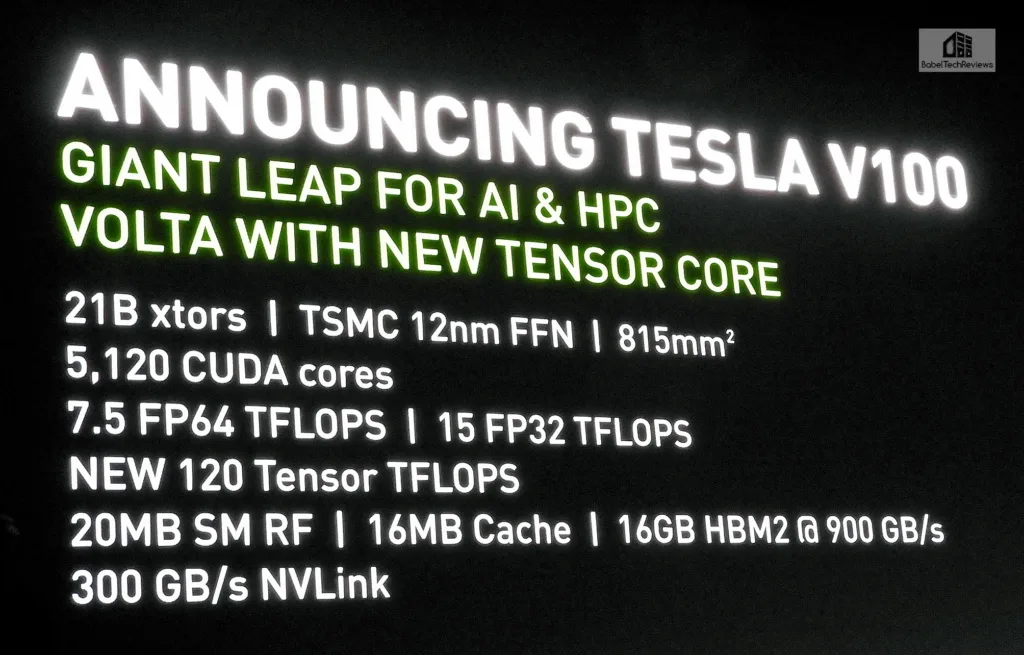

The new Volta specifications are impressive. Compare Volta’s GV100 with Pascal’s GP100 and Maxwell’s GM200 before it in NVIDIA’s chart. The image below from NVIDIA’s site shows a full GV100 GPU with 84 SMs. Just as with previous architectures, a product may use a cut-down configuration of the full GPU. For example, the Tesla V100 accelerator uses 80 SMs. A full GV100 GPU has a total of 5376 FP32 cores, 5376 INT32 cores, 2688 FP64 cores, 672 Tensor Cores, and 336 texture units. Each memory controller works with 768 KB of L2 cache for a total of 6144 KB of L2 cache in the full chip, and each HBM2 DRAM stack is controlled by a pair of memory controllers.
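As a rough sanity check on those figures, the short Python sketch below divides the quoted full-chip totals by 84 to get per-SM counts and then scales them to the 80 SMs of the Tesla V100. It assumes that every SM is identical and that the totals scale linearly with the SM count. The function and variable names are made up for illustration, and the derived Tesla V100 totals are our own arithmetic, not numbers quoted in this article.

```python
# Full-chip totals for GV100 as quoted above (84 SMs).
FULL_GV100_SMS = 84
FULL_GV100_UNITS = {
    "FP32 cores": 5376,
    "INT32 cores": 5376,
    "FP64 cores": 2688,
    "Tensor Cores": 672,
    "texture units": 336,
}


def units_per_sm(totals, sm_count):
    """Divide chip-wide totals by the SM count (assumes identical SMs)."""
    per_sm = {}
    for name, total in totals.items():
        quotient, remainder = divmod(total, sm_count)
        if remainder:
            raise ValueError(f"{name}: {total} is not divisible by {sm_count}")
        per_sm[name] = quotient
    return per_sm


def scale_to(per_sm, sm_count):
    """Scale per-SM counts to a configuration with sm_count enabled SMs."""
    return {name: count * sm_count for name, count in per_sm.items()}


PER_SM = units_per_sm(FULL_GV100_UNITS, FULL_GV100_SMS)

# Assumption: the 80-SM Tesla V100 keeps the same per-SM layout.
TESLA_V100 = scale_to(PER_SM, 80)

# L2 cache: 6144 KB in total at 768 KB per memory controller,
# with a pair of memory controllers per HBM2 stack.
L2_TOTAL_KB = 6144
L2_PER_CONTROLLER_KB = 768
MEMORY_CONTROLLERS = L2_TOTAL_KB // L2_PER_CONTROLLER_KB
HBM2_STACKS = MEMORY_CONTROLLERS // 2

if __name__ == "__main__":
    for name, count in PER_SM.items():
        print(f"{name}: {count} per SM, {TESLA_V100[name]} with 80 SMs")
    print(f"memory controllers: {MEMORY_CONTROLLERS}, HBM2 stacks: {HBM2_STACKS}")
```

The quoted totals all divide evenly by 84, which is consistent with a chip built from identical SMs, and the L2 figures imply eight memory controllers feeding four HBM2 stacks.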

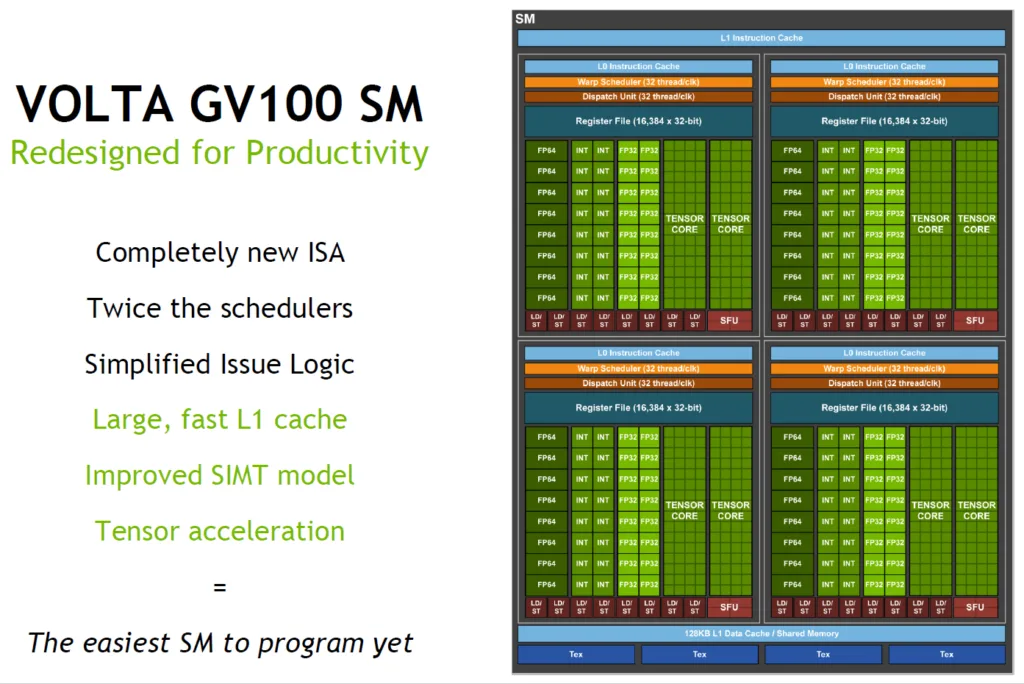

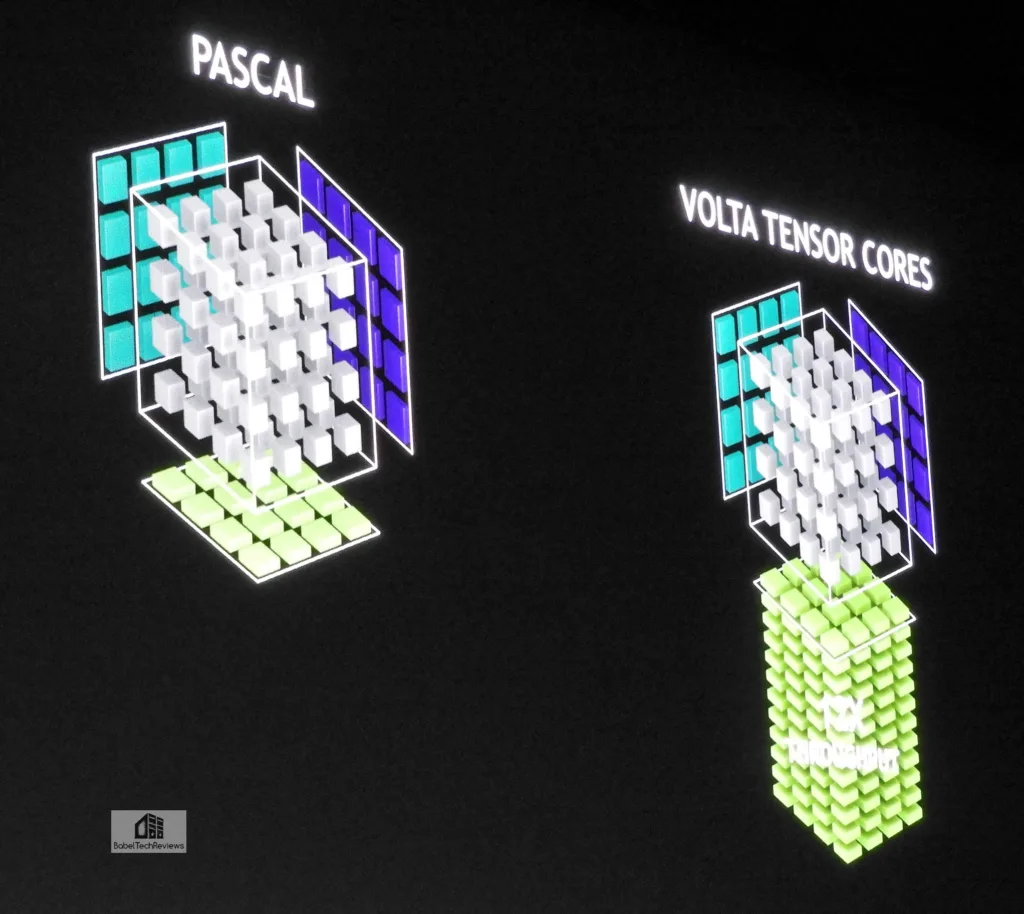

The SM diagram below shows a design that has been reworked and improved from the Pascal architecture. The Volta architecture appears to be a pretty significant departure from Pascal, and perhaps NVIDIA’s biggest change to their architecture since Fermi.

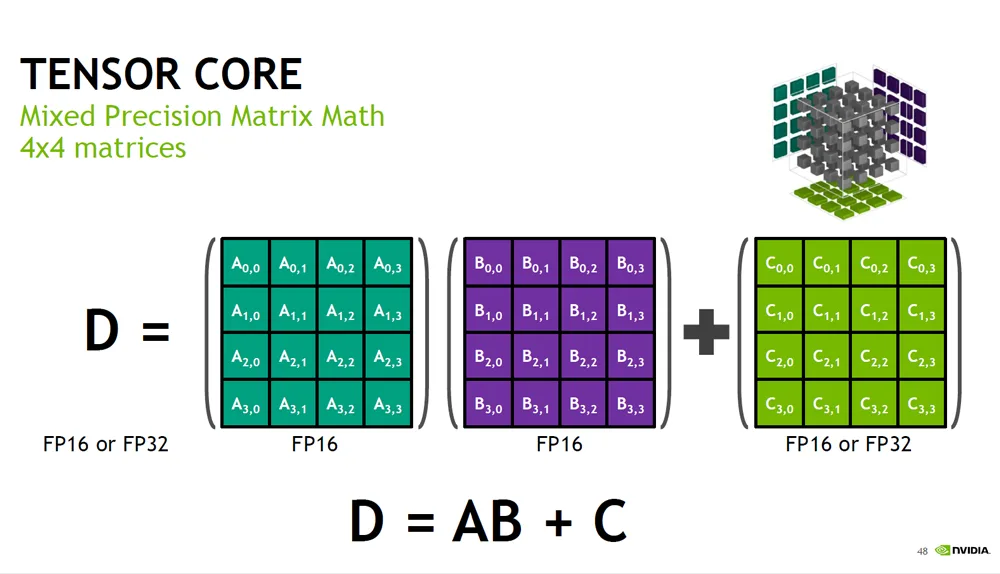

We have little idea yet how the new SMs will speed up performance for Volta GeForce gamers. Something new in the GV100 architecture is the Tensor Core, which works particularly well for the matrix calculations used in deep learning. Where these kinds of calculations are used, there will be a significant speed-up on the order of 8X or more over Pascal.
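To give a feel for what mixed-precision matrix math means, here is a minimal Python sketch of a matrix multiply-accumulate, D = A × B + C, where the inputs A and B are rounded to 16-bit floating point and the running sums are kept in 32-bit floating point. That FP16-in, FP32-accumulate split is our assumption based on NVIDIA’s public descriptions of Tensor Cores, not something detailed in this article. This pure-Python emulation only illustrates the arithmetic; it says nothing about how the hardware is built or how fast it is, and the helper names are hypothetical.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value.

    struct raises OverflowError for magnitudes beyond the FP16 range.
    """
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def mixed_precision_mma(a, b, c):
    """Return D = A x B + C for matrices given as lists of rows.

    A and B are rounded to FP16 before multiplying; the accumulator
    starts from C and is rounded to FP32 after every addition.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    d = []
    for i in range(rows):
        out_row = []
        for j in range(cols):
            acc = to_fp32(c[i][j])
            for p in range(inner):
                product = to_fp16(a[i][p]) * to_fp16(b[p][j])
                acc = to_fp32(acc + product)
            out_row.append(acc)
        d.append(out_row)
    return d


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[5.0, 6.0], [7.0, 8.0]]
    c = [[1.0, 1.0], [1.0, 1.0]]
    print(mixed_precision_mma(a, b, c))
    # FP16 cannot represent 0.1 exactly, so the input is slightly rounded.
    print(to_fp16(0.1))
```

Small integers survive the FP16 rounding unchanged, so the example matrices give exact results, while a value such as 0.1 is visibly rounded on input. That loss of input precision is the trade-off mixed-precision math accepts in exchange for throughput.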

So what does this mean for gamers? Mixed-precision matrix math is not used for PC gaming. GV100’s Tensor Cores probably won’t be used for gaming, as they are optimized for deep learning inferencing and training, which are an important part of high performance computing (HPC). NVIDIA advertises the GV100 as “the fastest and most productive GPU for deep learning and HPC”. The GV100 will cost over $18,500 each in quantities of 8 when purchased as a Volta DGX-1 deep learning box in Q3.

Because this chip is so massive (21 billion transistors on an 815 mm^2 die, which is at TSMC’s reticle limit), it is reasonable to assume that yields are probably not very good at first. NVIDIA will provide these brand new GPUs first to those in the scientific community and to businesses that find these fastest GPUs affordable and perhaps even indispensable to their work. In Q4, the GV100 will become more widely available as Tesla, and if it follows Pascal’s pattern, we may even see a Volta V6000 GPU for Quadro announced, perhaps later this year. As for gamers, it is perhaps reasonable to speculate that we may see a ‘GV104’, a cut-down and simplified GeForce gaming GPU without the deep learning instruction sets, available sooner rather than later.

With the Pascal architecture, less than one month passed between the GP100’s announcement at GTC 2016 and Editor’s Day in Austin, where the GTX 1080 and GTX 1070 GeForce video cards were announced. The architectural changes and refinements that make Volta faster than Pascal for deep learning/AI, workstations and creation, and crunching numbers will also likely make it faster when optimized for gaming, and perhaps even more so for DX12 than Pascal is.

It is certainly possible that AMD’s Vega may end up facing Volta rather than Pascal. On the professional front, AMD has just announced Vega as the Radeon Frontier Edition with availability next month, and consumer Vega Radeons evidently are to come in the second half of 2017. Of course, only NVIDIA and AMD know their own timing, but it is very exciting for gamers to realize that a brand new Volta architecture for deep learning, HPC, and graphics has been announced and that it will shortly be available for researchers and programmers with deep pockets.

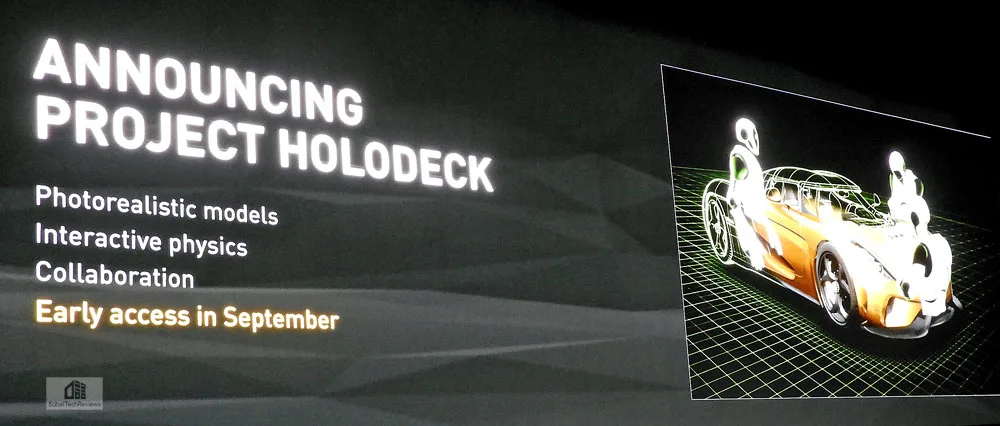

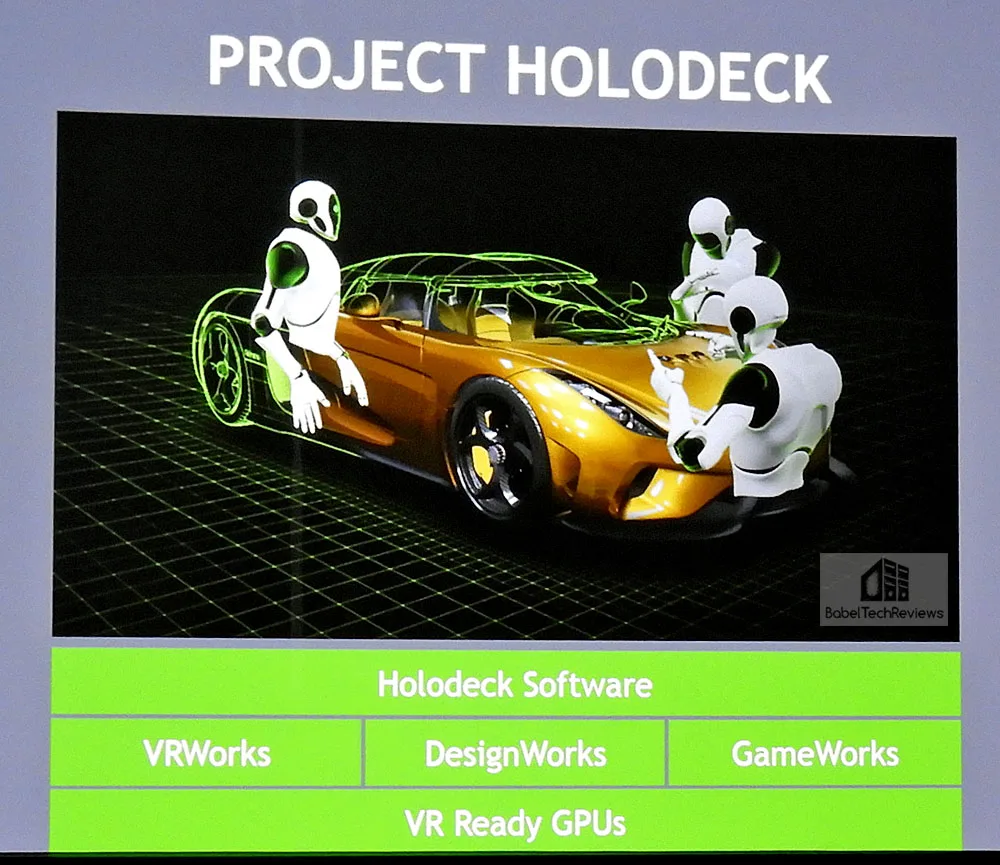

Some other big announcements that should interest gamers were made at this year’s GTC, including NVIDIA’s Project Holodeck. NVIDIA believes that a holodeck is ultimately the future of VR, and they are expressing their vision for collaboration in an environment with photorealistic models and interactive physics, including audio and haptics.

Although Project Holodeck is not a gaming-oriented project, eventually it could be massively multiplayer. It is based on the Unreal Engine and is as yet a concept, a demo of future possibilities.

As detailed in the professional meeting at GTC 2017 covering Quadro products, the Holodeck software is based on NVIDIA’s existing technology, including VRWorks, DesignWorks, and GameWorks, and it requires NVIDIA’s fastest VR-ready GPUs, among which Volta will no doubt play a future part. A very big part of the Holodeck software will be based on VRWorks. Virtual Reality (VR) and VR demos were everywhere one looked at the GTC.

This time, there was less emphasis on VR games than at previous GTCs. Inside NVIDIA’s VR Village, this editor used the Vive HMD to experience a historic London bank building that was recreated in VR from photographs, as well as NVIDIA’s own headquarters as it looked during construction.

Of course, VR demos still include gaming and BTR is shortly going to be benchmarking VR games much as we benchmark PC games, but now by using video benchmarking and NVIDIA’s FCAT VR benching tool.

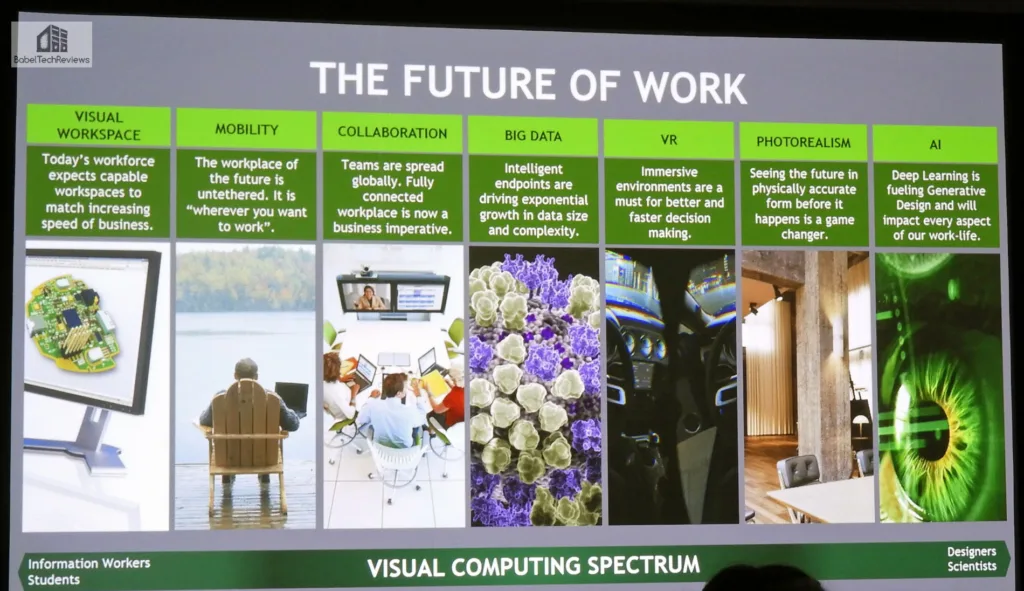

We were able to attend the press question and answer session, but NVIDIA officials refused to comment on the elephant in the room: the GeForce GPU that will ultimately be derived from Volta. The Quadro session detailed the “future of work” and it was noted that collaborative VR is not too far in the future.

Visual computing also includes gaming.

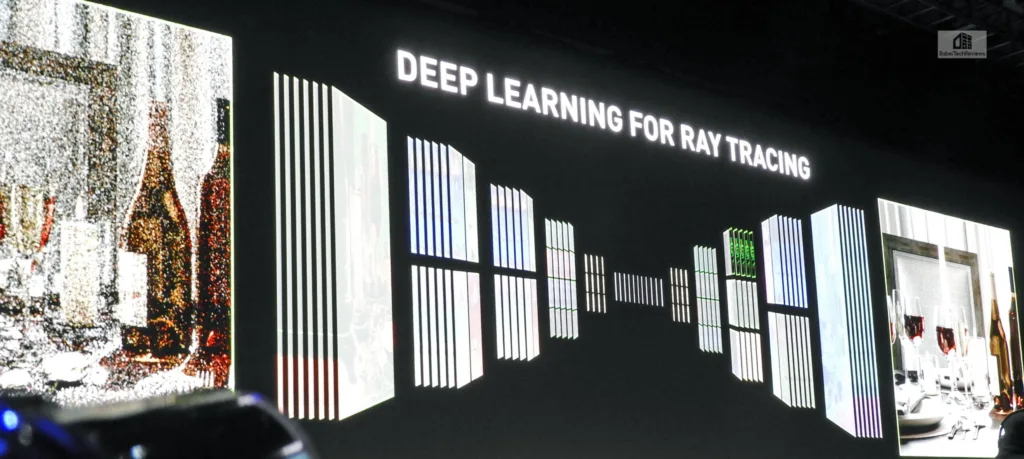

NVIDIA also showed off deep learning for Ray Tracing.

Time is of the essence for most developers, and Ray Tracing takes a lot of time. Coupling Ray Tracing with AI/deep learning results in a production render approximately 4x faster than the usual process. This will be available for Volta as well as for Pascal-based Quadros.

One of the biggest announcements was that Toyota is using NVIDIA’s Drive PX for their autonomous vehicles.

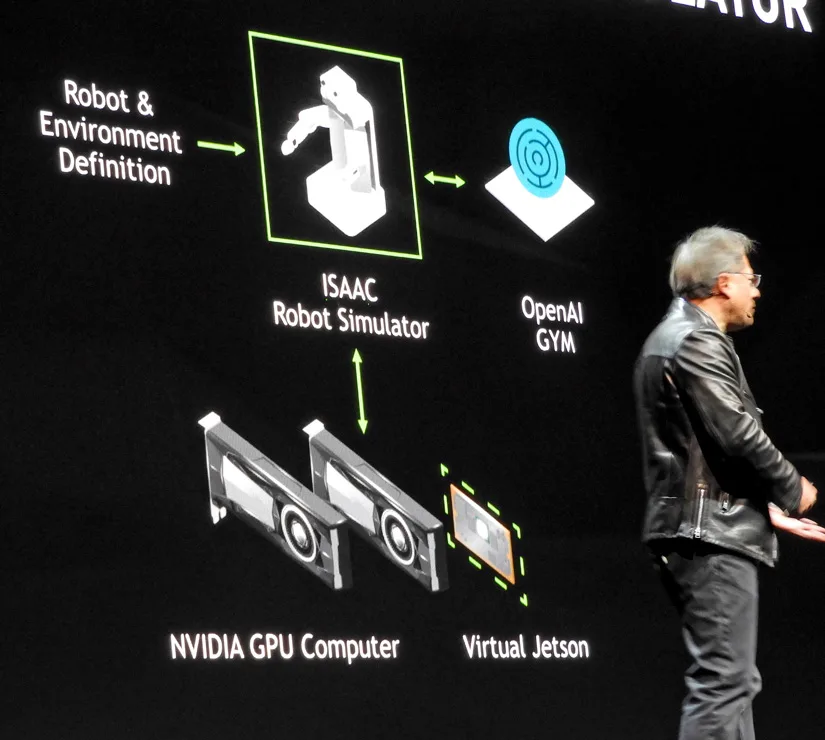

In addition to VR and Project Holodeck, NVIDIA’s Isaac advances the training and testing of robots by using simulations. Before deployment, robots must be extensively trained and tested, which can be expensive and impractical when it relies on physical prototypes and on modeling all possible interactions between a robot and its environment.

The Isaac robot simulator provides an AI-based software solution for training robots in highly realistic virtual environments and then transferring what is learned to physical units. Isaac is built on Epic Games’ Unreal Engine 4 and uses NVIDIA’s simulation technologies. This robot simulator should be able to train robots much more quickly and with less expense than the current method.

We have had almost a week to think about GTC 2017, and this is our summary of it. Bearing in mind past GTCs, we cannot help but compare them to each other. As gamers, we can be very interested in NVIDIA’s GTC even though it does not cover GeForce gaming directly. The very first tech article that this editor wrote covered NVISION ’08, a combination of LAN party and GPU computing technology conference that was open to the public. We are still hoping that NVIDIA will do this again. The following year, in 2009, NVIDIA held the first GTC, a much smaller industry event at the Fairmont Hotel, across the street from the San Jose Convention Center, and it introduced the Fermi architecture. This editor also attended GTC 2012, which introduced the Kepler architecture.

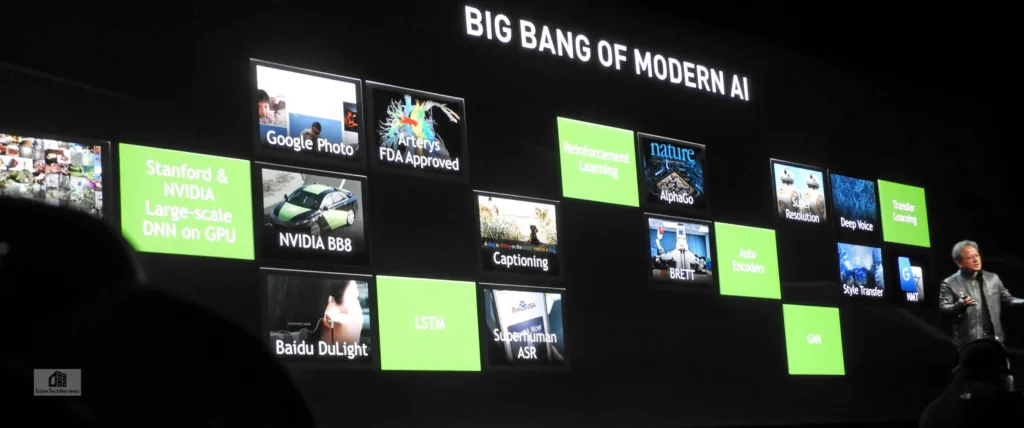

Just three years ago, we were in attendance at GTC 2014, where the big breakthrough in Deep Learning image recognition from raw pixels was “a cat”. The following year, at GTC 2015, we saw that computers can easily recognize “a bird on a branch” faster than a well-trained human, which demonstrates incredible progress in using the GPU for image detection. And at GTC 2016, we saw computers creating works of art, and we also saw a deep learning computer beat the world champion Go player, something that researchers claimed would take decades more to accomplish.

Just one year ago at GTC 2016, NVIDIA released the GP100 on the brand new Pascal architecture, and now we have Volta GV100, available next quarter for HPC, which was announced just ahead of AMD’s new Vega Radeon Frontier Edition for HPC. We want to thank NVIDIA for inviting this gamer to GTC 2017, and we look forward to returning next year, March 26-29, for GTC 2018.

These are exciting times for gamers, and BTR will keep up with the very latest news regarding Volta and Vega. We have returned to benching Virtual Reality using NVIDIA’s FCAT VR tool together with video benching, and we will post the second installment of our long-delayed series next week.

Happy Gaming!